1.How to set up the Kubernetes dashboard on an Ubuntu 16.04 cluster?

To create the Kubernetes dashboard, follow the link below:

https://docs.aws.amazon.com/eks/latest/userguide/dashboard-tutorial.html

To deploy the Metrics Server

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

kubectl get deployment metrics-server -n kube-system

Deploy the dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

root@ip-172-31-43-76:~# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.102.6.123 <none> 8000/TCP 120m

kubernetes-dashboard NodePort 10.99.44.152 <none> 443:32508/TCP 120m

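Here NodePort 32508 was allocated to the kubernetes-dashboard service. The recommended manifest normally creates this service as ClusterIP, so the listing above reflects the service after its type was changed to NodePort (for example with kubectl -n kubernetes-dashboard edit service kubernetes-dashboard).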

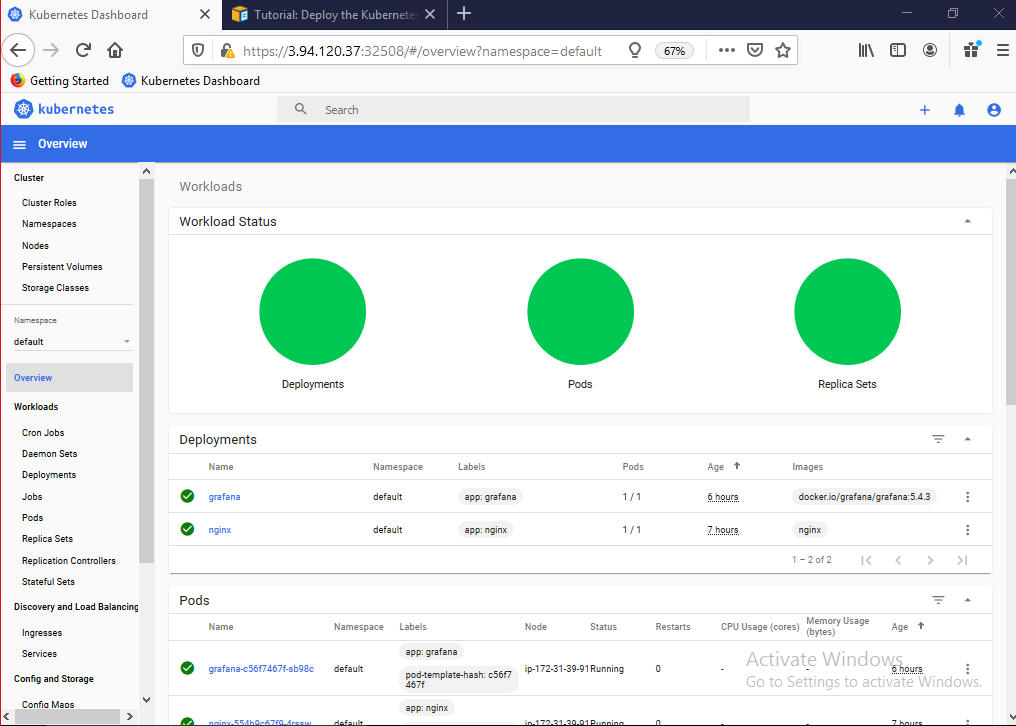

Finally, open the dashboard in a browser using the public IP of your master or any worker node together with the NodePort.

Example: https://3.94.120.37:32508/#!/login
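The NodePort can also be read from the service listing instead of being copied by hand. A minimal sketch, assuming the column layout shown in the listing above; `node_port` is a hypothetical helper name, and the sample text stands in for live `kubectl get svc -n kubernetes-dashboard` output:

```shell
# node_port NAME: read `kubectl get svc` output on stdin and print the
# NodePort of service NAME (the number after the colon in PORT(S)).
# Services without a NodePort (no colon in PORT(S)) print nothing.
node_port() {
  awk -v svc="$1" '$1 == svc && split($5, ports, ":") == 2 {
    sub(/\/.*/, "", ports[2])    # "32508/TCP" -> "32508"
    print ports[2]
  }'
}

# Sample listing copied from the output above.
sample='NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
dashboard-metrics-scraper ClusterIP 10.102.6.123 <none> 8000/TCP 120m
kubernetes-dashboard NodePort 10.99.44.152 <none> 443:32508/TCP 120m'

port=$(printf '%s\n' "$sample" | node_port kubernetes-dashboard)
echo "https://<node-public-ip>:$port/"
```

Against a live cluster the same helper can be fed directly: `kubectl get svc -n kubernetes-dashboard | node_port kubernetes-dashboard`. An alternative that avoids text parsing is `kubectl get svc kubernetes-dashboard -n kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'`.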

# Create service account

kubectl create serviceaccount cluster-admin-dashboard-sa

# Bind ClusterAdmin role to the service account

kubectl create clusterrolebinding cluster-admin-dashboard-sa \
  --clusterrole=cluster-admin \
  --serviceaccount=default:cluster-admin-dashboard-sa

# Parse the token (the service account lives in the default namespace, so its secret is looked up there)

TOKEN=$(kubectl describe secret $(kubectl get secret | awk '/^cluster-admin-dashboard-sa-token-/{print $1}') | awk '$1=="token:"{print $2}')
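The token lookup is two text filters chained together: one finds the secret name, the other pulls the token field out of the description. A small sketch of both filters, with hypothetical helper names (`secret_name`, `token_of`) and made-up sample output in which the name suffix and the token value are placeholders:

```shell
# secret_name PREFIX: read `kubectl get secret` output on stdin and print
# the first secret whose name starts with PREFIX.
secret_name() {
  awk -v p="$1" 'index($1, p) == 1 { print $1; exit }'
}

# token_of: read `kubectl describe secret` output on stdin and print the
# value of the "token:" field.
token_of() {
  awk '$1 == "token:" { print $2 }'
}

# Made-up sample output; suffix "abcde" and the token are placeholders.
secrets='NAME TYPE DATA AGE
cluster-admin-dashboard-sa-token-abcde kubernetes.io/service-account-token 3 5m
default-token-xyz12 kubernetes.io/service-account-token 3 2d'

described='Name: cluster-admin-dashboard-sa-token-abcde
Type: kubernetes.io/service-account-token
ca.crt: 1025 bytes
namespace: 7 bytes
token: PLACEHOLDER.TOKEN.VALUE'

name=$(printf '%s\n' "$secrets" | secret_name cluster-admin-dashboard-sa-token-)
token=$(printf '%s\n' "$described" | token_of)
echo "secret=$name token=$token"
```

On a live cluster this becomes `name=$(kubectl get secret | secret_name cluster-admin-dashboard-sa-token-)` followed by `kubectl describe secret "$name" | token_of`. The printed token is what gets pasted into the dashboard login page. On newer Kubernetes releases (1.24 and later), token secrets are no longer created automatically for service accounts, and `kubectl create token cluster-admin-dashboard-sa` is the usual replacement.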

2.What is kubectl?

A. kubectl is the command-line interface for Kubernetes. We use it to run commands against the cluster, and it is the main way we interact with everything in the cluster.

Example: kubectl get nodes

kubectl get pods

kubectl get pods --all-namespaces
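The output of these commands is plain text, so it can be post-processed with standard tools. As a small sketch, the hypothetical helper `pods_per_namespace` counts pods per namespace; the sample listing is made up for illustration and stands in for live `kubectl get pods --all-namespaces` output:

```shell
# pods_per_namespace: read `kubectl get pods --all-namespaces` output on
# stdin and print "<namespace> <pod count>", sorted by namespace.
pods_per_namespace() {
  awk 'NR > 1 { count[$1]++ } END { for (ns in count) print ns, count[ns] }' | sort
}

# Made-up sample listing (pod names are placeholders).
sample='NAMESPACE NAME READY STATUS RESTARTS AGE
default web-1 1/1 Running 0 3h
kube-system coredns-a 1/1 Running 0 2d
kube-system coredns-b 1/1 Running 0 2d
kube-system kube-proxy-x 1/1 Running 0 2d'

printf '%s\n' "$sample" | pods_per_namespace
```

With a cluster available, the usage is `kubectl get pods --all-namespaces | pods_per_namespace`.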

3.How to monitor Docker containers if we are not using Kubernetes in the project?

Powerful tools for monitoring Docker containers include: 1. Datadog 2. Docker API 3. cAdvisor 4. Scout
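For a quick check without any extra tooling, the Docker CLI exposes the same statistics through `docker stats`. A minimal sketch, assuming the output was produced with `docker stats --no-stream --format '{{.Name}} {{.CPUPerc}} {{.MemPerc}}'`; the helper name `busy_containers`, the container names, and the numbers are made up for illustration:

```shell
# busy_containers LIMIT: read "<name> <cpu%> <mem%>" lines on stdin and
# print the names of containers whose CPU usage is above LIMIT percent.
busy_containers() {
  awk -v limit="$1" '{
    cpu = $2
    sub(/%/, "", cpu)                  # "87.20%" -> "87.20"
    if (cpu + 0 > limit + 0) print $1
  }'
}

# Made-up sample in the format described above.
sample='web 12.50% 3.10%
db 87.20% 40.00%
cache 0.30% 1.25%'

printf '%s\n' "$sample" | busy_containers 50
```

On a Docker host this would be used as `docker stats --no-stream --format '{{.Name}} {{.CPUPerc}} {{.MemPerc}}' | busy_containers 50`. Tools such as cAdvisor or Datadog add history and alerting on top of the same kind of data.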

4. What is Container Orchestration?

Container orchestration means automating the deployment, scaling, networking, and management of containers, so that the services running in individual containers work together as a single application.

5.Kubernetes Architecture

In Kubernetes we have two kinds of nodes:

1.Master 2.Worker

1.Master

What is the main function of the Kubernetes master?

When we interact with Kubernetes, for example through the kubectl command-line interface, we are communicating with the cluster's Kubernetes master.

The cluster is launched from the master. First we run kubeadm init, which initialises all the master components.

Master components

A.Scheduler B.etcd C.API server D.kube controller manager

A.Scheduler

The scheduler's main purpose is to decide which node each newly created pod runs on, based on the pod's requirements and the capacity available on the nodes. It does not decide how many pods exist; that is handled by controllers (for example through a Deployment's replica count).

B.etcd

etcd is the key-value store that holds all of the cluster's state and configuration.

C.API server

Every request to the cluster goes through the API server. Users and the master and worker components do not talk to each other directly; they all communicate through the API.

D.kube controller manager

The controller manager runs the controllers that keep the actual state of the cluster in line with the desired state, for example by replacing pods that have failed.
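On a kubeadm cluster the master components run as pods in the kube-system namespace, so their health can be checked from a pod listing. A rough sketch under that assumption; `missing_components` is a hypothetical helper and the sample listing is made up (the pod name etcd-master in particular is assumed):

```shell
# missing_components: read `kubectl get pods -n kube-system` output on
# stdin and print every master component that has no pod in Running state.
missing_components() {
  awk '
    BEGIN { n = split("kube-apiserver kube-controller-manager kube-scheduler etcd", want, " ") }
    $3 == "Running" {
      for (i = 1; i <= n; i++)
        if (index($1, want[i]) == 1) seen[want[i]] = 1
    }
    END {
      for (i = 1; i <= n; i++)
        if (!(want[i] in seen)) print want[i]
    }
  '
}

# Made-up sample listing: the scheduler pod is crash-looping.
sample='NAME READY STATUS RESTARTS AGE
etcd-master 1/1 Running 0 2d
kube-apiserver-master 1/1 Running 0 2d
kube-controller-manager-master 1/1 Running 0 2d
kube-scheduler-master 0/1 CrashLoopBackOff 5 2d'

printf '%s\n' "$sample" | missing_components
```

Usage on a real master would be `kubectl get pods -n kube-system | missing_components`; empty output means all four components have a running pod.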

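2.Worker

A.Kubelet B.kube-proxy

A.Kubelet

The kubelet is the agent that runs on every worker node and is the communication point between the master and that node. It receives pod specifications from the API server and makes sure the containers are running; this is how the master orchestrates the worker nodes.

B.kube-proxy

kube-proxy runs on every node and maintains the network rules that route Service traffic to the right pods, so end-user requests that arrive at a worker node reach the application through kube-proxy.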

6. Kubernetes API server

When we interact with our Kubernetes cluster using the kubectl command-line interface, we are actually communicating with the API server component on the master.

1.The API server is the central touch point that is accessed by all users, automation, and components in the Kubernetes cluster.

2.The API server is an HTTP server.

3.The API can be queried with curl.

Before using curl, check that the port is listening:

root@ip-172-31-18-245:~# netstat -tlpen | grep 8080

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 0 127934 30585/kubectl
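The same check can be scripted. A minimal sketch, assuming the `netstat -tlpen` column layout shown above; `listening_on` is a hypothetical helper name:

```shell
# listening_on PORT: read netstat -tln style output on stdin and succeed
# if some socket is in LISTEN state on PORT.
listening_on() {
  awk -v port="$1" '
    $6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == port) found = 1 }
    END { exit !found }
  '
}

# Sample line copied from the output above.
sample='tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 0 127934 30585/kubectl'

if printf '%s\n' "$sample" | listening_on 8080; then
  echo "port 8080 is listening"
else
  echo "port 8080 is not listening"
fi
```

On the master itself the usage is `netstat -tlpen | listening_on 8080`. The listener in the output is kubectl, which is what `kubectl proxy --port=8080` would produce; that is an assumption, since the command that started it is not shown.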

7.To know api details by curl

8.To get all api-versions
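The commands for questions 7 and 8 are not shown in the text. As a hedged sketch, assuming a local proxy on 127.0.0.1:8080 as in the netstat output: `curl http://127.0.0.1:8080/api` returns the core API versions, and `kubectl api-versions` prints every group/version the server supports. The hypothetical helper `api_groups` reduces that listing to group names; the sample lines are a small illustrative subset, not output from a real cluster:

```shell
# api_groups: read `kubectl api-versions` output on stdin and print the
# distinct API groups; a bare version such as "v1" is the core group.
api_groups() {
  awk -F/ 'NF == 1 { print "core" } NF == 2 { print $1 }' | sort -u
}

# Illustrative subset of an api-versions listing.
sample='apps/v1
batch/v1
batch/v1beta1
v1'

printf '%s\n' "$sample" | api_groups
```

With a cluster available this is simply `kubectl api-versions | api_groups`.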

9.Kubernetes scheduler

Kubernetes scheduler will schedule pods onto nodes.

10.While adding a worker to the master, the kubeadm join token does not work. What can we do if it shows the error below?

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

Ans: This error usually means the bootstrap token in the join command is no longer valid; kubeadm tokens expire after 24 hours by default. Generate a new token on the master with the following command, then run the join command it prints on the worker node.

kubeadm token create --print-join-command

11.To display image versions on the pods use below command

kubectl get pods -o=jsonpath='{range .items[*]}{"\n"}{.metadata.name}{":\t"}{range .spec.containers[*]}{.image}{", "}{end}{end}' && printf '\n'

12.How to upgrade Kubernetes from version 1.14 to 1.15.4?

First upgrade kubeadm on the master:

ubuntu@master:~$ sudo apt-get update && apt-get install -y kubeadm=1.15.4-00

Hit:1 http://us-east-2.ec2.archive.ubuntu.com/ubuntu xenial InRelease

Get:2 http://us-east-2.ec2.archive.ubuntu.com/ubuntu xenial-updates InRelease [109 kB]

Get:3 http://us-east-2.ec2.archive.ubuntu.com/ubuntu xenial-backports InRelease [107 kB]

Hit:5 http://ppa.launchpad.net/ansible/ansible/ubuntu xenial InRelease

Get:6 http://security.ubuntu.com/ubuntu xenial-security InRelease [109 kB]

Hit:4 https://packages.cloud.google.com/apt kubernetes-xenial InRelease

Fetched 325 kB in 0s (490 kB/s)

Reading package lists... Done

E: Could not open lock file /var/lib/dpkg/lock-frontend - open (13: Permission denied)

E: Unable to acquire the dpkg frontend lock (/var/lib/dpkg/lock-frontend), are you root?

The install step failed because sudo applied only to apt-get update; the apt-get install after && ran as the normal user. Running the install with sudo works:

ubuntu@master:~$ sudo apt-get install -y kubeadm=1.15.4-00

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages will be upgraded:

kubeadm

1 upgraded, 0 newly installed, 0 to remove and 19 not upgraded.

Need to get 8,250 kB of archives.

After this operation, 589 kB of additional disk space will be used.

Get:1 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 kubeadm amd64 1.15.4-00 [8,250 kB]

Fetched 8,250 kB in 0s (11.8 MB/s)

(Reading database ... 84525 files and directories currently installed.)

Preparing to unpack .../kubeadm_1.15.4-00_amd64.deb ...

Unpacking kubeadm (1.15.4-00) over (1.14.0-00) ...

Setting up kubeadm (1.15.4-00) ...

ubuntu@master:~$ kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.4", GitCommit:"67d2fcf276fcd9cf743ad4be9a9ef5828adc082f", GitTreeState:"clean", BuildDate:"2019-09-18T14:48:18Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

ubuntu@master:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:53:57Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.10", GitCommit:"575467a0eaf3ca1f20eb86215b3bde40a5ae617a", GitTreeState:"clean", BuildDate:"2019-12-11T12:32:32Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"lin

Next, kubeadm upgrade plan lists the versions that the cluster can be upgraded to:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.14.10

[upgrade/versions] kubeadm version: v1.15.4

I0417 11:07:20.745358 9158 version.go:248] remote version is much newer: v1.18.2; falling back to: stable-1.15

[upgrade/versions] Latest stable version: v1.15.11

[upgrade/versions] Latest version in the v1.14 series: v1.14.10

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

Kubelet 2 x v1.14.0 v1.15.11

1 x v1.15.0 v1.15.11

Upgrade to the latest stable version:

COMPONENT CURRENT AVAILABLE

API Server v1.14.10 v1.15.11

Controller Manager v1.14.10 v1.15.11

Scheduler v1.14.10 v1.15.11

Kube Proxy v1.14.10 v1.15.11

CoreDNS 1.3.1 1.3.1

Etcd 3.3.10 3.3.10

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.15.11

Note: Before you can perform this upgrade, you have to update kubeadm to v1.15.11.

____________________________________________________________________

ubuntu@master:~$ sudo kubeadm upgrade apply v1.15.11

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/version] You have chosen to change the cluster version to "v1.15.11"

[upgrade/versions] Cluster version: v1.14.10

[upgrade/versions] kubeadm version: v1.15.4

[upgrade/version] FATAL: the --version argument is invalid due to these errors:

- Specified version to upgrade to "v1.15.11" is higher than the kubeadm version "v1.15.4". Upgrade kubeadm first using the tool you used to install kubeadm

Can be bypassed if you pass the --force flag

ubuntu@master:~$ sudo kubeadm upgrade apply v1.15.4

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/version] You have chosen to change the cluster version to "v1.15.4"

[upgrade/versions] Cluster version: v1.14.10

[upgrade/versions] kubeadm version: v1.15.4

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/prepull] Prepulling image for component etcd.

[upgrade/prepull] Prepulling image for component kube-controller-manager.

[upgrade/prepull] Prepulling image for component kube-apiserver.

[upgrade/prepull] Prepulling image for component kube-scheduler.

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-controller-manager

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 0 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-apiserver

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-etcd

[apiclient] Found 1 Pods for label selector k8s-app=upgrade-prepull-kube-scheduler

[upgrade/prepull] Prepulled image for component etcd.

[upgrade/prepull] Prepulled image for component kube-controller-manager.

[upgrade/prepull] Prepulled image for component kube-apiserver.

[upgrade/prepull] Prepulled image for component kube-scheduler.

[upgrade/prepull] Successfully prepulled the images for all the control plane components

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.15.4"...

Static pod: kube-apiserver-master hash: 447e7bfe433b589f4cd0f9fda9f5d66b

Static pod: kube-controller-manager-master hash: cdd3739b4a04d4b592796fbabb0d04da

Static pod: kube-scheduler-master hash: 1b5a365f5e2647c21bcfb28d9943aacc

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests120368430"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Renewing apiserver certificate

[upgrade/staticpods] Renewing apiserver-kubelet-client certificate

[upgrade/staticpods] Renewing front-proxy-client certificate

[upgrade/staticpods] Renewing apiserver-etcd-client certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-17-11-09-39/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-master hash: 447e7bfe433b589f4cd0f9fda9f5d66b

Static pod: kube-apiserver-master hash: 447e7bfe433b589f4cd0f9fda9f5d66b

Static pod: kube-apiserver-master hash: 447e7bfe433b589f4cd0f9fda9f5d66b

Static pod: kube-apiserver-master hash: 984c7d7f5513d61c6834edef74562801

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Renewing controller-manager.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-17-11-09-39/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-master hash: cdd3739b4a04d4b592796fbabb0d04da

Static pod: kube-controller-manager-master hash: f669d8a04b5413b895d44f661e2c8886

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Renewing scheduler.conf certificate

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2020-04-17-11-09-39/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-master hash: 1b5a365f5e2647c21bcfb28d9943aacc

Static pod: kube-scheduler-master hash: 005af131c75f8b2bb5add0110835dbda

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.15.4". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

ubuntu@master:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:53:57Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.4", GitCommit:"67d2fcf276fcd9cf743ad4be9a9ef5828adc082f", GitTreeState:"clean", BuildDate:"2019-09-18T14:41:55Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

ubuntu@master:~$
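The FATAL error in the first attempt shows the rule kubeadm enforces: the target version may not be higher than the installed kubeadm version. A minimal sketch of that comparison, assuming a `sort` that supports `-V` (GNU coreutils); the helper name `version_le` is hypothetical, and this is not how kubeadm itself implements the check:

```shell
# version_le A B: succeed when version A is lower than or equal to B,
# using the version ordering of `sort -V`.
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

kubeadm_version=v1.15.4   # kubeadm version reported in the log above

for target in v1.15.11 v1.15.4; do
  if version_le "$target" "$kubeadm_version"; then
    echo "$target: ok to apply"
  else
    echo "$target: upgrade kubeadm first"
  fi
done
```

As the last log line says, the kubelets still need upgrading after the control plane. On this setup that would likely be `sudo apt-get install -y kubelet=1.15.4-00 kubectl=1.15.4-00` followed by `sudo systemctl restart kubelet` on each node; the package version string is assumed to follow the same pattern as the kubeadm package.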

13.I tried to create a cluster, but the master node status is NotReady. Do you know how to fix it?

A. If the pod network plugin is not installed or is not working properly, the master node stays in the NotReady state. Install or repair the network plugin and the node becomes Ready.
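A quick way to spot affected nodes is to filter the node listing. A minimal sketch; `not_ready_nodes` is a hypothetical helper, and the sample listing is made up for illustration:

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready/ { print $1 }'
}

# Made-up sample listing.
sample='NAME STATUS ROLES AGE VERSION
master NotReady master 5m v1.15.4
worker1 Ready <none> 3m v1.15.4'

printf '%s\n' "$sample" | not_ready_nodes
```

On a real cluster: `kubectl get nodes | not_ready_nodes`. For each node it prints, `kubectl describe node <name>` shows the reason, and `kubectl get pods -n kube-system` shows whether the network plugin pods are running.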
